Datasets

Abstract: Recent years have seen a focus on research into distributed optimization algorithms for multi-robot Collaborative Simultaneous Localization and Mapping (C-SLAM). Research in this domain, however, is made difficult by a lack of standard benchmark datasets. Such datasets have been used to great effect in the field of single-robot SLAM, and researchers focused on multi-robot problems would benefit greatly from dedicated benchmark datasets. To address this gap we design and release the Collaborative Open-Source Multi-robot Optimization Benchmark (COSMO-Bench) – a suite of 24 datasets derived from a state-of-the-art C-SLAM front-end and real-world LiDAR data.

This website serves as a convenient interface for downloading the COSMO-Bench datasets. The datasets themselves are hosted in perpetuity at: KILTHUB

COSMO-Bench Datasets

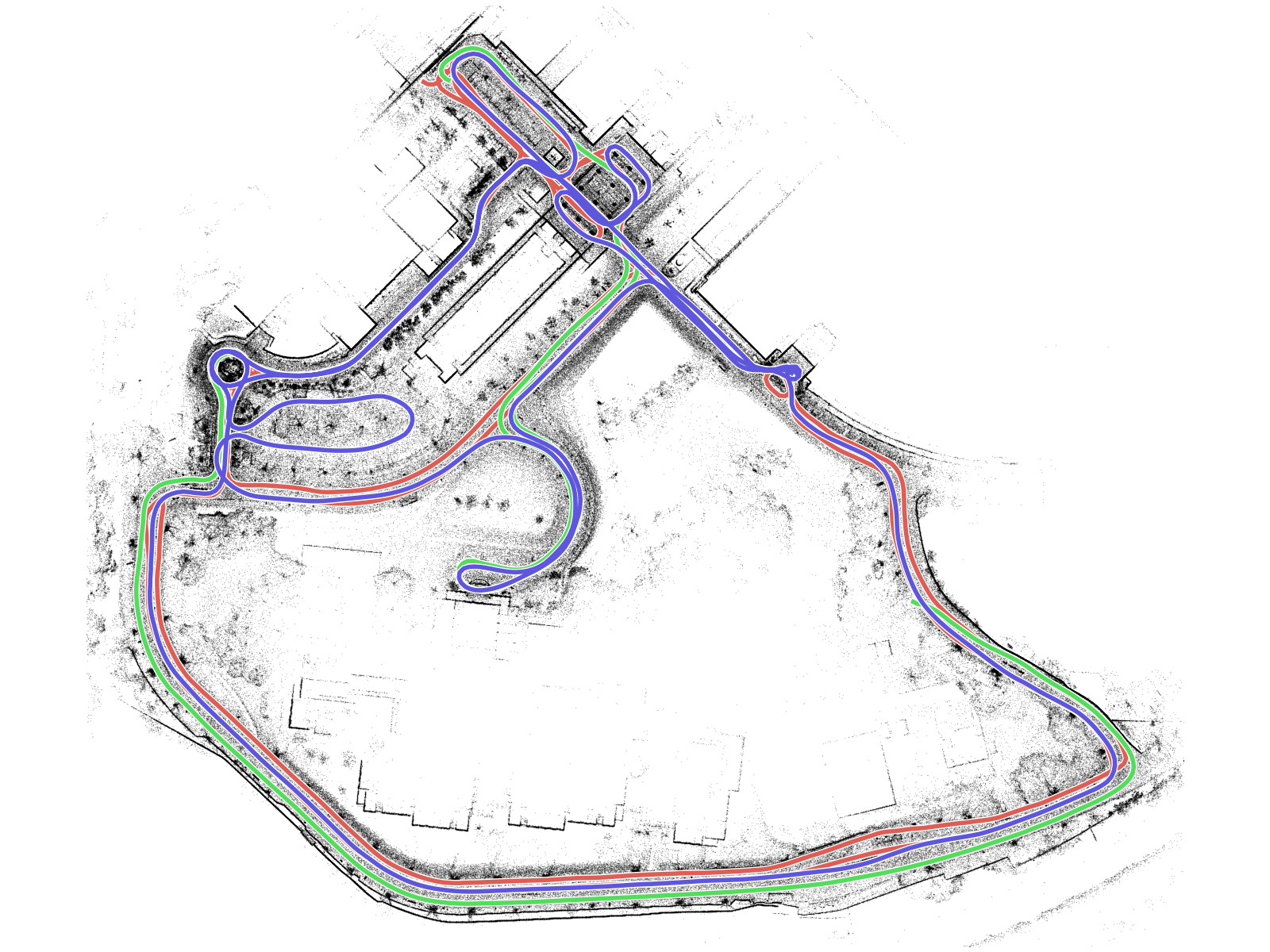

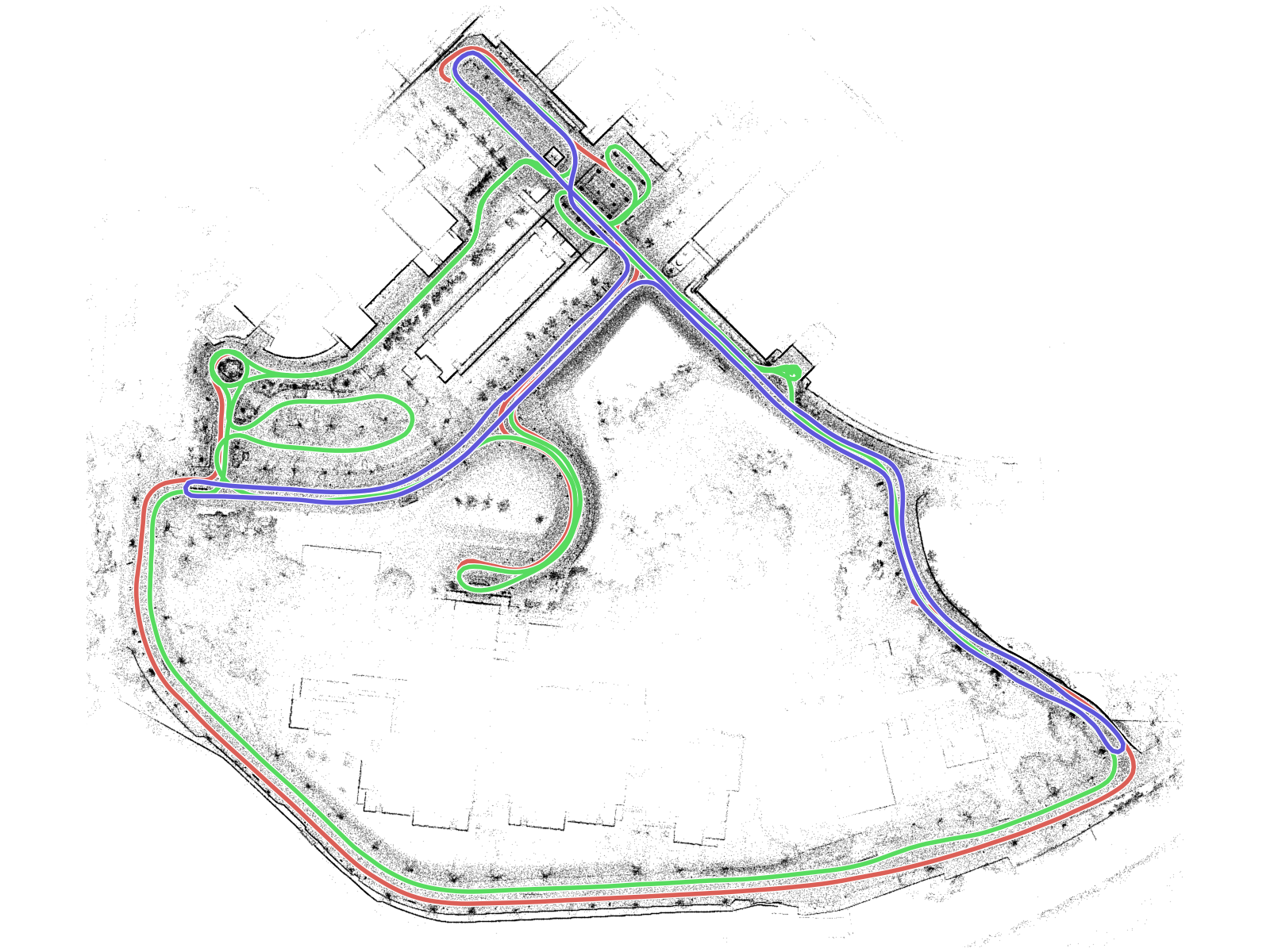

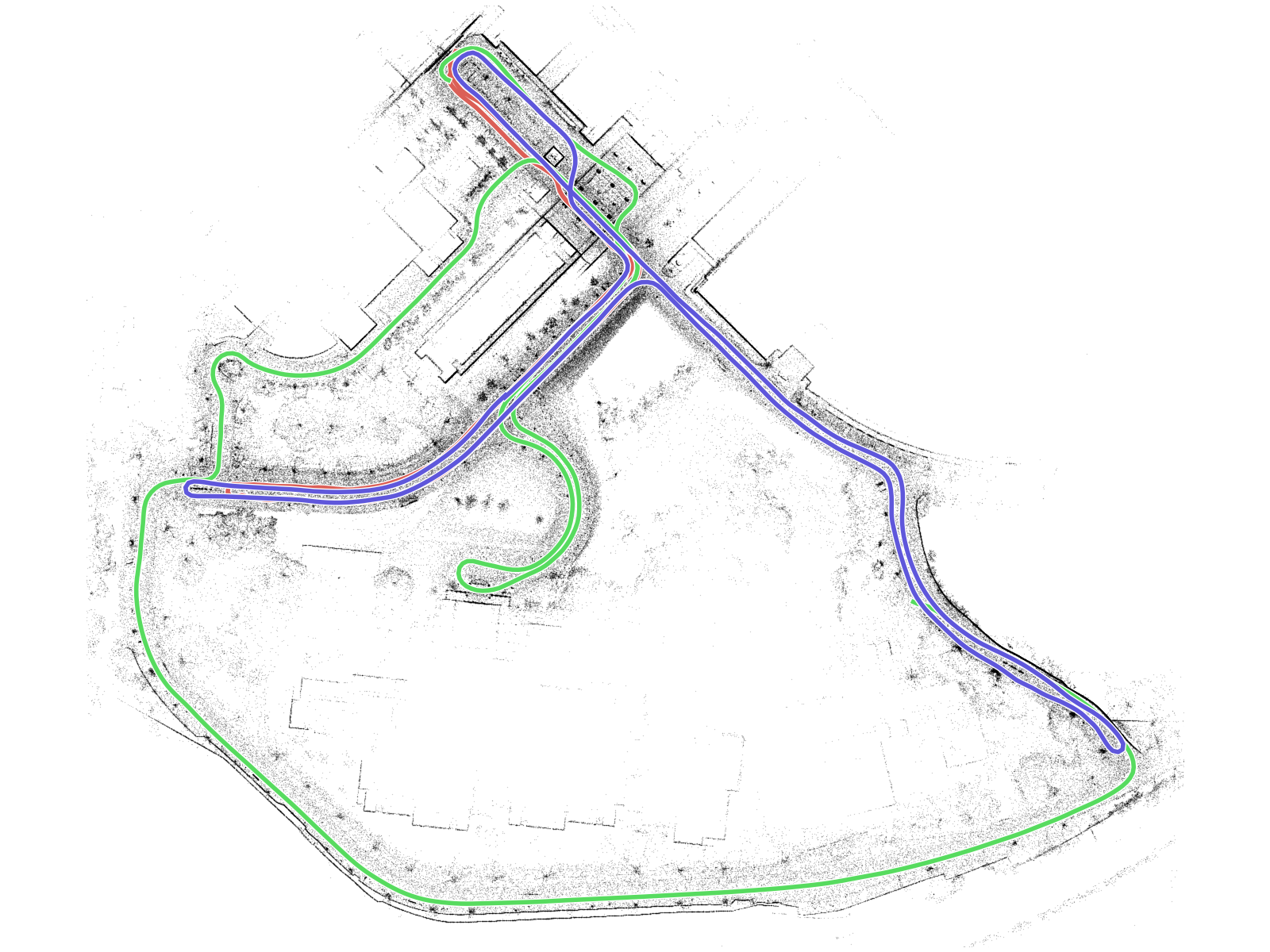

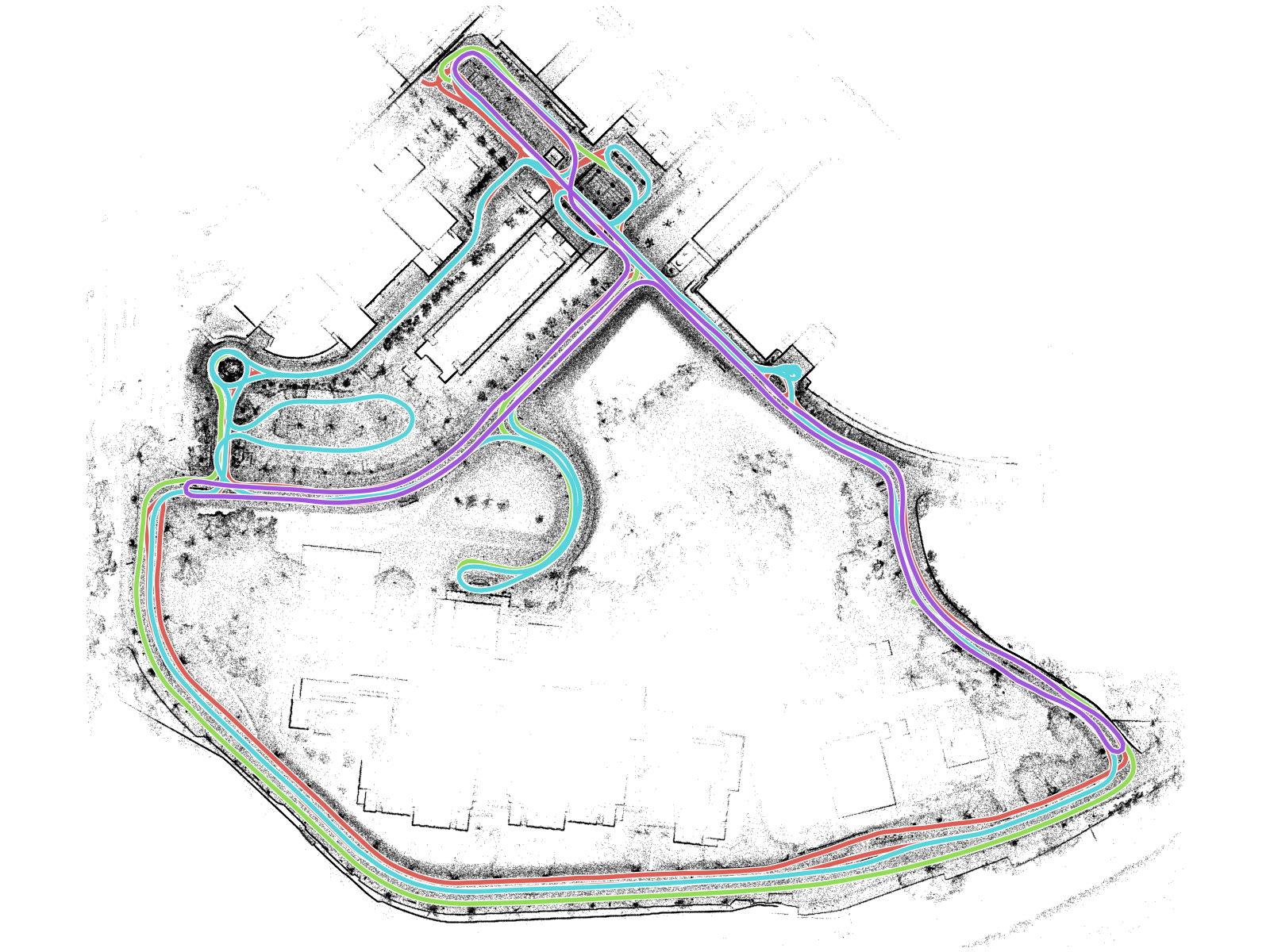

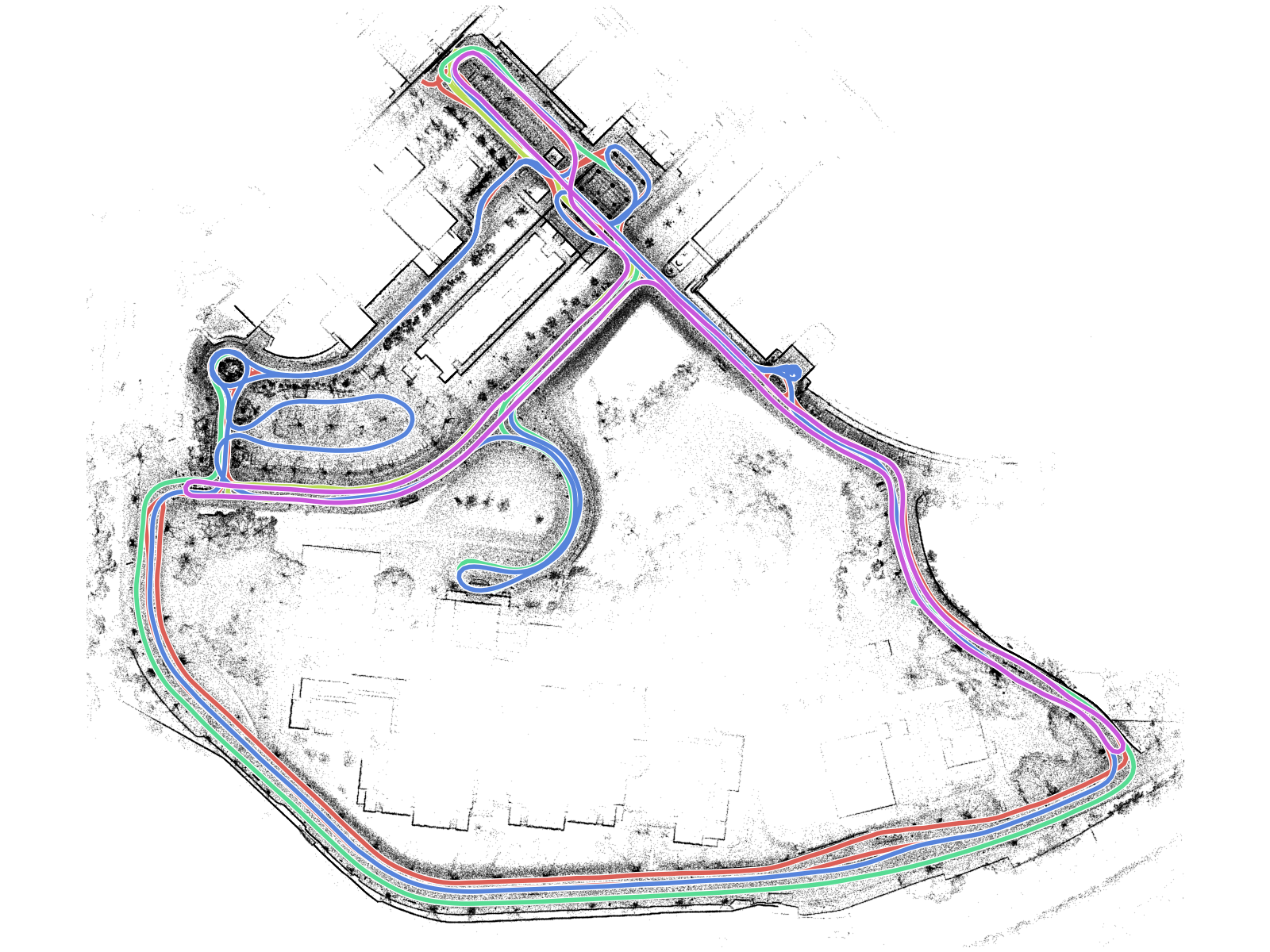

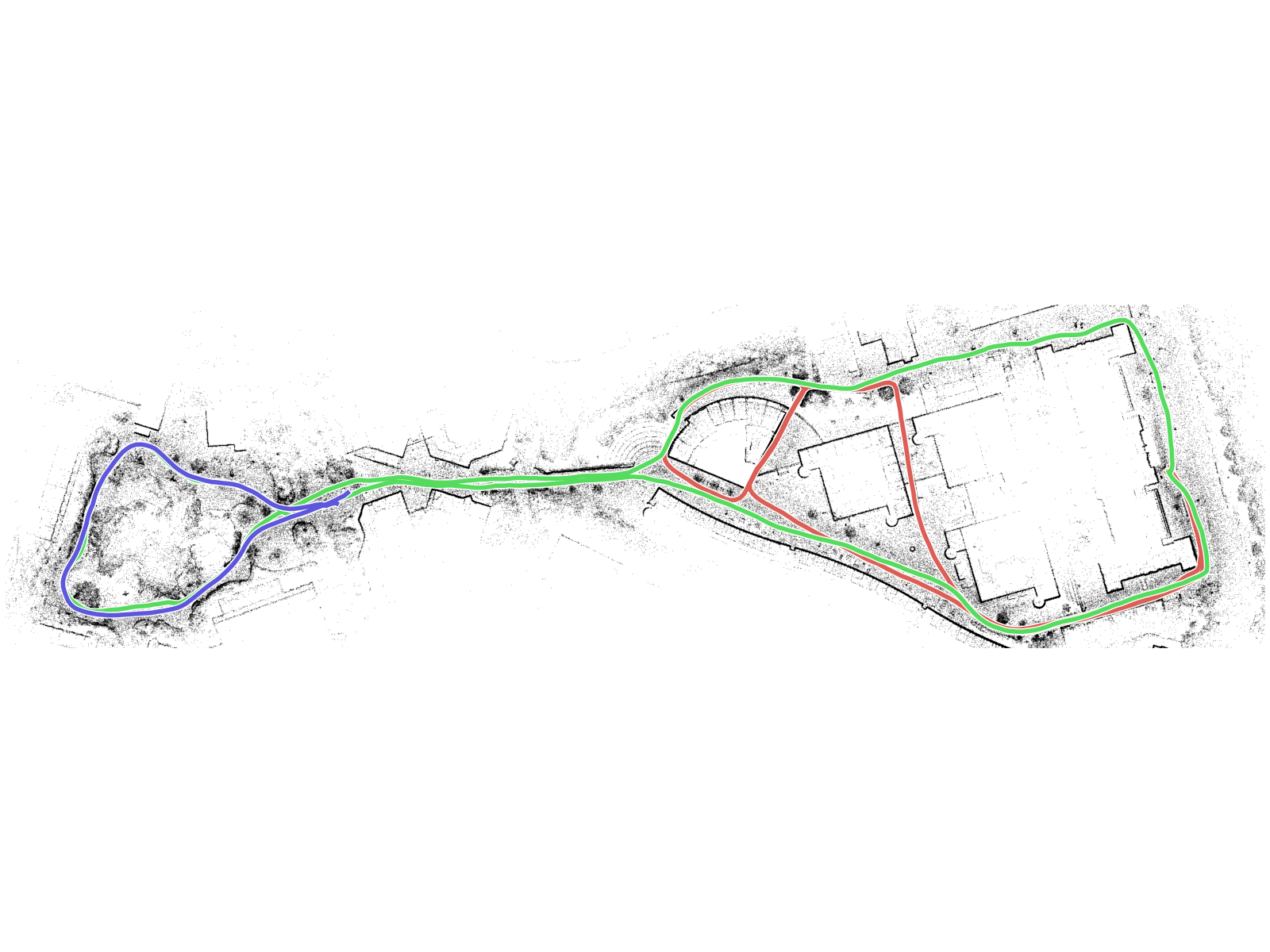

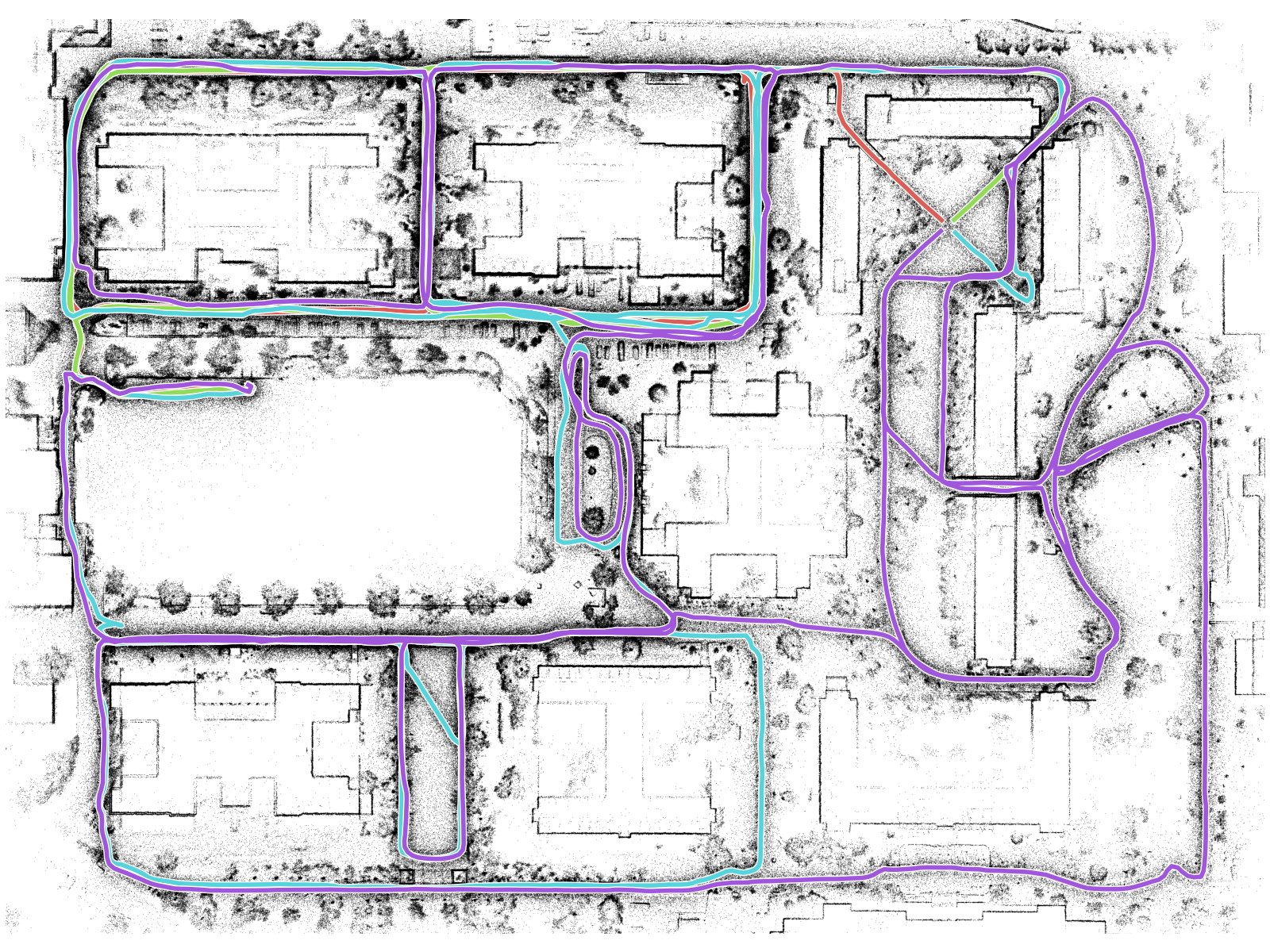

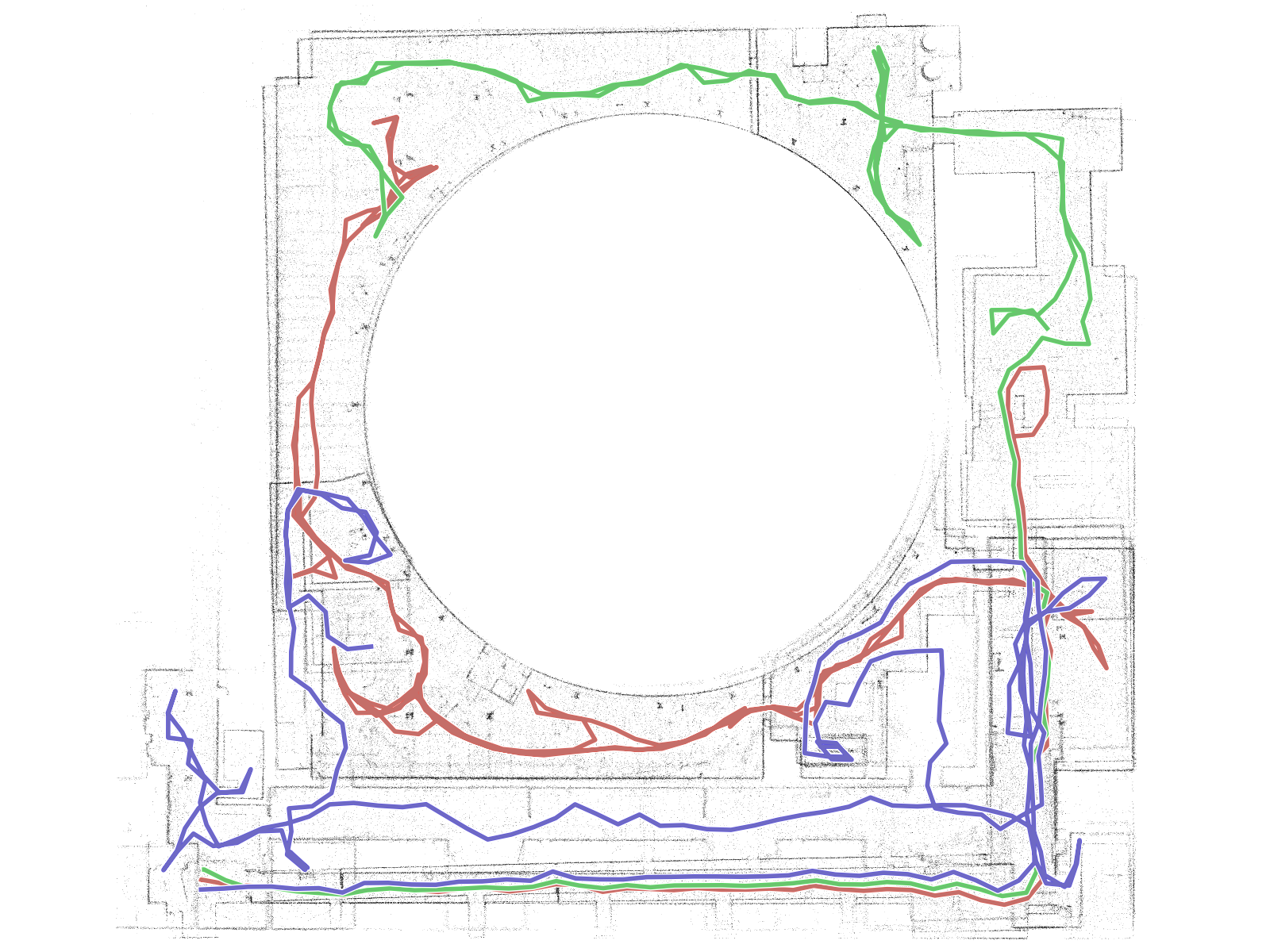

The COSMO-Bench datasets. For each sequence we plot the reference solution and list metadata including the sequence name, source data, component trials, total duration (MM:SS), total distance traveled, and the number (#) of measurements plus outlier rate (%) for both intra-robot (LC) and inter-robot (IRLC) loop-closures. For each of the 12 sequences we generate a dataset using both the Wi-Fi and Pro-Radio communication models, for a total of 24 datasets. Component trial names are shortened for brevity: “D” for “Day” and “N” for “Night” for the MCD data, and “K” for “Kittredge Loop” and “M” for “Main Campus” for the CU-Multi data. Each download link is a shortcut to the dataset generated with the specified communication model (Wi-Fi or Pro-Radio).

NOTE (September 15th 2025) - Shortly after making this data available we were notified of some issues with the groundtruth of the CU-Multi data on which the kittredge and main_campus datasets are based. These issues have since been resolved and new versions of the affected datasets have been uploaded. If you are one of the handful of people who downloaded these datasets before September 15th 2025, please update to the corrected versions. So that users can verify they have the correct versions, we additionally provide checksums for all datasets. See below for instructions on how to use them to validate your copy.

| Download | Reference Solution | Details |
|---|---|---|
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |
| Wi-Fi, Pro-Radio | | |

Nebula Datasets

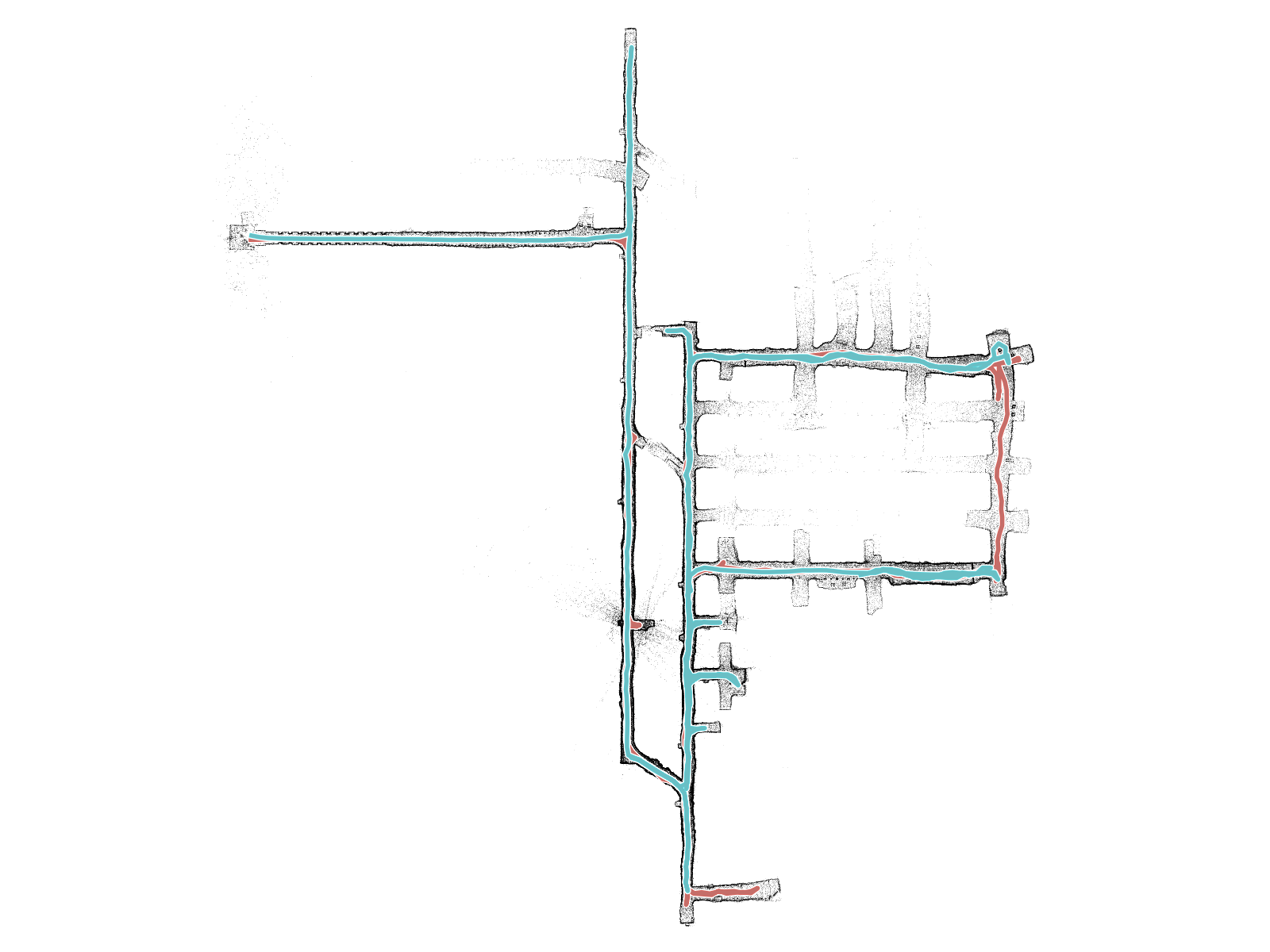

For the convenience of the community, we additionally provide the Nebula Multi-Robot Datasets in the same JRL format as our benchmarks! For each dataset, we plot the reference solution and list metadata including the dataset name, involved robots, total duration (MM:SS), total distance traveled, and the number (#) of measurements plus outlier rate (%) for both intra-robot (LC) and inter-robot (IRLC) loop-closures.

The original release of these datasets, in rosbag format and with over-optimistic noise models, can be found here.

| Download | Reference Solution | Details |
|---|---|---|
| Link | | |
| Link | | |
| Link | | |
| Link | | |

Dataset Validation

To validate that you have the correct versions of all datasets, download the checksum (.chk) files below, place them in the directory that contains the cosmobench/ and nebula/ directories, and run the following commands from that directory.

md5sum -c cosmobench.chk

md5sum -c nebula.chk

This will list all of the contained files and should report OK for all datasets. If you see any issues, please re-download the data.

Checksum Files:

- Checksums for COSMO-Bench Datasets: cosmobench.chk

- Checksums for Nebula Datasets: nebula.chk
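
On systems where `md5sum` is not available, the same check can be approximated in Python. The following is a minimal sketch and not part of the official tooling. It assumes the .chk files use the standard `md5sum` output layout (one `<hash>  <path>` entry per line, with paths relative to the directory holding the .chk file); the helper names `md5_of_file` and `verify_checksums` are hypothetical.

```python
import hashlib
from pathlib import Path


def md5_of_file(path, chunk_size=1 << 20):
    """Return the hex MD5 digest of a file, reading in chunks to bound memory use."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checksums(chk_path, root="."):
    """Check every entry of an md5sum-style checksum file (assumed layout).

    Returns a dict mapping each listed path to "OK", "FAILED", or "MISSING".
    """
    results = {}
    for line in Path(chk_path).read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        expected, name = line.split(maxsplit=1)
        name = name.lstrip("*")  # md5sum prefixes binary-mode entries with '*'
        target = Path(root) / name
        if not target.is_file():
            results[name] = "MISSING"
        elif md5_of_file(target) == expected.lower():
            results[name] = "OK"
        else:
            results[name] = "FAILED"
    return results


if __name__ == "__main__":
    # Run from the directory containing cosmobench/, nebula/, and the .chk files.
    for chk in ("cosmobench.chk", "nebula.chk"):
        if not Path(chk).is_file():
            print(f"{chk}: checksum file not found")
            continue
        for name, status in verify_checksums(chk).items():
            print(f"{name}: {status}")
```

As with `md5sum -c`, any entry that does not report OK indicates that the corresponding file should be downloaded again.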

Citation Information

If you use these datasets in your work please cite:

@techreport{cosmobench_mcgann_2025,

title={{COSMO-Bench}: A Benchmark for Collaborative {SLAM} Optimization},

author={D. McGann and E. R. Potokar and M. Kaess},

fullauthor={Daniel McGann and Easton R. Potokar and Michael Kaess},

year={2025},

number={{arXiv}:2508.16731 [cs.{RO}]},

institution={arXiv preprint},

type={\unskip\space},

}

If you use datasets based on the Multi-Campus Data please also cite their great dataset paper:

@inproceedings{mcd_nguyen_2024,

title = {{MCD}: Diverse Large-Scale Multi-Campus Dataset for Robot Perception},

author = {T.M. Nguyen and S. Yuan and T.H. Nguyen and P. Yin and H. Cao and L. Xie and M. Wozniak and P. Jensfelt and M. Thiel and J. Ziegenbein and N. Blunder},

fullauthor = {Thien-Minh Nguyen and Shenghai Yuan and Thien Hoang Nguyen and Pengyu Yin and Haozhi Cao and Lihua Xie and Maciej Wozniak and Patric Jensfelt and Marko Thiel and Justin Ziegenbein and Noel Blunder},

booktitle = {Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR)},

pages = {22304--22313},

year = {2024},

address = {Seattle, {US}},

}

If you use datasets based on the CU-Multi Data please also cite their great dataset paper:

@TechReport{cumulti_albin_2025,

title = {{CU-Multi}: A Dataset for Multi-Robot Data Association},

author = {D. Albin and M. Mena and A. Thomas and H. Biggie and X. Sun and D. Woods and S. McGuire and C. Heckman},

fullauthor = {Doncey Albin and Miles Mena and Annika Thomas and Harel Biggie and Xuefei Sun and Dusty Woods and Steve McGuire and Christoffer Heckman},

year = {2025},

number = {{arXiv}:2505.17576 [cs.{RO}]},

institution = {arXiv preprint},

type = {\unskip\space},

}

If you use our versions of the Nebula Datasets please also cite the original Nebula paper:

@article{lamp2_chang_2022,

title = {{LAMP} 2.0: A Robust Multi-Robot {SLAM} System for Operation in Challenging Large-Scale Underground Environments},

author = {Y. Chang and K. Ebadi and C.E. Denniston and M.F. Ginting and A. Rosinol and A. Reinke and M. Palieri and J. Shi and A. Chatterjee and B. Morrell and A. Agha-mohammadi and L. Carlone},

fullauthor = {Yun Chang and Kamak Ebadi and Christopher E. Denniston and Muhammad Fadhil Ginting and Antoni Rosinol and Andrzej Reinke and Matteo Palieri and Jingnan Shi and Arghya Chatterjee and Benjamin Morrell and Ali-akbar Agha-mohammadi and Luca Carlone},

journal = {IEEE Robotics and Automation Letters (RA-L)},

year = {2022},

volume = {7},

number = {4},

pages = {9175-9182},

}